Coding with Claude Code

in late 2025

About me

- Not an AI expert

- An engineer who likes to understand

→ Why I'm giving this talk

Part 1

There is no stop sign

AI basics

What is a computer?

A machine that takes in information, processes it by following instructions, and gives you results.

Moore's Law (1965, revised in 1975)

The number of transistors on a chip doubles roughly every two years

It was predicted to hit physical limits... but we found other ways to keep improving

TL;DR - computers can still become more powerful, no real stop in sight
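
A back-of-the-envelope sketch of what "doubling roughly every two years" compounds to. The starting count of one million transistors is a made-up round number, not a real chip.

```python
def projected_transistors(start_count: float, years: float, doubling_period: float = 2.0) -> float:
    """Exponential growth: the count doubles once every `doubling_period` years."""
    return start_count * 2 ** (years / doubling_period)

# Hypothetical starting point: 1 million transistors
start = 1_000_000
for years in (2, 10, 20):
    factor = projected_transistors(start, years) / start
    print(f"after {years:2d} years: x{factor:,.0f}")
```

Ten years of doubling every two years is a 32x increase; twenty years is over 1,000x.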

Wirth's Law (1995)

"Software is getting slower more rapidly than hardware is getting faster."

- Companies are incentivized to develop inefficient software

- Maximize short-term feature delivery

- Only barrier: being tolerable to humans

This does NOT hold for the AI stack: there, companies want maximum performance

What is a Neural Network?

A program structured as layers of connected nodes, loosely inspired by brain neurons

→ Runs on classical machines → benefits from Moore's Law

Traditional vs Neural Network

- Traditional: you write explicit rules

- Neural network: you show it examples and it learns the patterns

No magic. Just matrix multiplication and gradual weight adjustment.

Just parallelized computation underneath, but the resulting behavior is very different from what computer scientists were used to...
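
To make "matrix multiplication and gradual weight adjustment" concrete, here is a minimal sketch: a single artificial neuron (logistic regression, the smallest possible network) learns the logical AND from four examples by gradient descent, next to the traditional hand-written rule. Real networks stack many such layers; the learning rate and number of epochs are arbitrary choices for this toy.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Traditional approach: a human writes the rule explicitly.
def and_rule(x1: int, x2: int) -> int:
    return 1 if (x1 == 1 and x2 == 1) else 0

# Neural approach: only examples are given; the rule is never written down.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def train(examples, epochs: int = 5000, lr: float = 0.5):
    w = [0.0, 0.0]  # weights
    b = 0.0         # bias
    for _ in range(epochs):
        for (x1, x2), y in examples:
            # Forward pass: weighted sum (a tiny matrix multiplication), squashed into (0, 1)
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # For a sigmoid with cross-entropy loss, the gradient w.r.t. the weighted sum is (p - y)
            err = p - y
            # Gradual weight adjustment: a small step against the gradient
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b

def predict(w, b, x1: int, x2: int) -> int:
    return 1 if sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5 else 0

w, b = train(EXAMPLES)
learned = [predict(w, b, x1, x2) for (x1, x2), _ in EXAMPLES]
explicit = [and_rule(x1, x2) for (x1, x2), _ in EXAMPLES]
print(learned, explicit)
```

Both approaches end up with the same answers; the difference is that nobody told the neuron what AND means.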

MNIST

The "hello world" of machine learning

- 70,000 handwritten digits (0-9)

- In the 1990s, recognizing handwritten digits was genuinely hard

- Neural networks achieved ~99% accuracy

Proof of concept: NNs can learn patterns humans can't explicitly describe in code

Emergent Properties

When you scale neural networks up, they start doing things nobody explicitly trained them to do

Scaling matters - you're potentially unlocking entirely new capabilities

ChatGPT?

"Attention Is All You Need" (2017)

Then they just scaled it
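
The core operation of that 2017 paper is scaled dot-product attention, softmax(QKᵀ / √d_k) V: each query scores every key, the scores become weights that sum to 1, and the output is a weighted average of the values. A pure-Python sketch of a single head, deliberately leaving out masking, multiple heads and the learned projections that produce Q, K and V:

```python
import math

Matrix = list[list[float]]

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one head, no mask."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # How strongly this query matches each key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # non-negative, sums to 1
        # Output row = weighted average of the value vectors
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output

# The query matches the first key better, so the output leans towards the first value
print(attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[10.0, 0.0], [0.0, 10.0]]))
```

Scaling then mostly means more of these layers, wider matrices and more data, on the same operation.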

Modern LLM

- More data

- More power

- Additional specific methods

→ The same foundation remains

3Blue1Brown

Learn More

The Bitter Lesson

Richard Sutton (2019)

General methods that leverage computation scale better than clever domain-specific engineering.

LLM

- Works in their favor: scaling

- Works against them: too much human input

Richard Sutton acknowledges that LLMs are powerful and will keep improving, but also that they have limits

Scaling → Nuance

"Pierre prend la boule et la lance." (French: "Pierre takes the ball and throws it", or "Pierre takes the ball and the spear")

| Sonnet | Opus |
|---|---|
| Pierre takes the ball and throws it. No ambiguity detected. | Verb "lancer": throws the ball. Noun "la lance": takes the spear. |

A year ago...

- Replaces templates

- Answers questions

- Specific modifications

How does a human actually code?

- Read existing code to understand context

- Search for relevant files and patterns

- Make a change

- Run tests or the app to see if it works

- Read error messages

- Repeat

Positive feedback loop

AI must follow the same loop

act → observe → adjust → repeat
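
The act → observe → adjust → repeat loop can be sketched as a small driver. This is a toy illustration, not how Claude Code is implemented: the "change" is just a number, the hypothetical "test suite" only answers too low / too high, and the agent adjusts by binary search.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

def feedback_loop(act: Callable[[Optional[str]], T],
                  observe: Callable[[T], Optional[str]],
                  max_iterations: int = 10) -> tuple[T, int]:
    """act -> observe -> adjust -> repeat, until `observe` reports no error."""
    feedback: Optional[str] = None
    for iteration in range(1, max_iterations + 1):
        attempt = act(feedback)      # make a change, informed by the last error message
        feedback = observe(attempt)  # "run the tests": None means everything passes
        if feedback is None:
            return attempt, iteration
    raise RuntimeError(f"still failing after {max_iterations} iterations: {feedback}")

# Toy stand-in for a code base: the hidden correct value
TARGET = 37

def make_agent(lo: int, hi: int) -> Callable[[Optional[str]], int]:
    state = {"lo": lo, "hi": hi, "last": None}

    def act(feedback: Optional[str]) -> int:
        # Adjust: use the error message to narrow down the next attempt
        if feedback == "too low":
            state["lo"] = state["last"] + 1
        elif feedback == "too high":
            state["hi"] = state["last"] - 1
        state["last"] = (state["lo"] + state["hi"]) // 2
        return state["last"]

    return act

def run_tests(guess: int) -> Optional[str]:
    if guess == TARGET:
        return None
    return "too low" if guess < TARGET else "too high"

print(feedback_loop(make_agent(0, 100), run_tests))  # (value found, number of iterations)
```

The error message is what makes each iteration better than the last; without `observe`, the agent would be guessing blind.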

Claude Code

"I started hacking around using Claude in the terminal. The first version was barebones: it couldn't read files, nor could it use bash"

- Boris Cherny, Anthropic

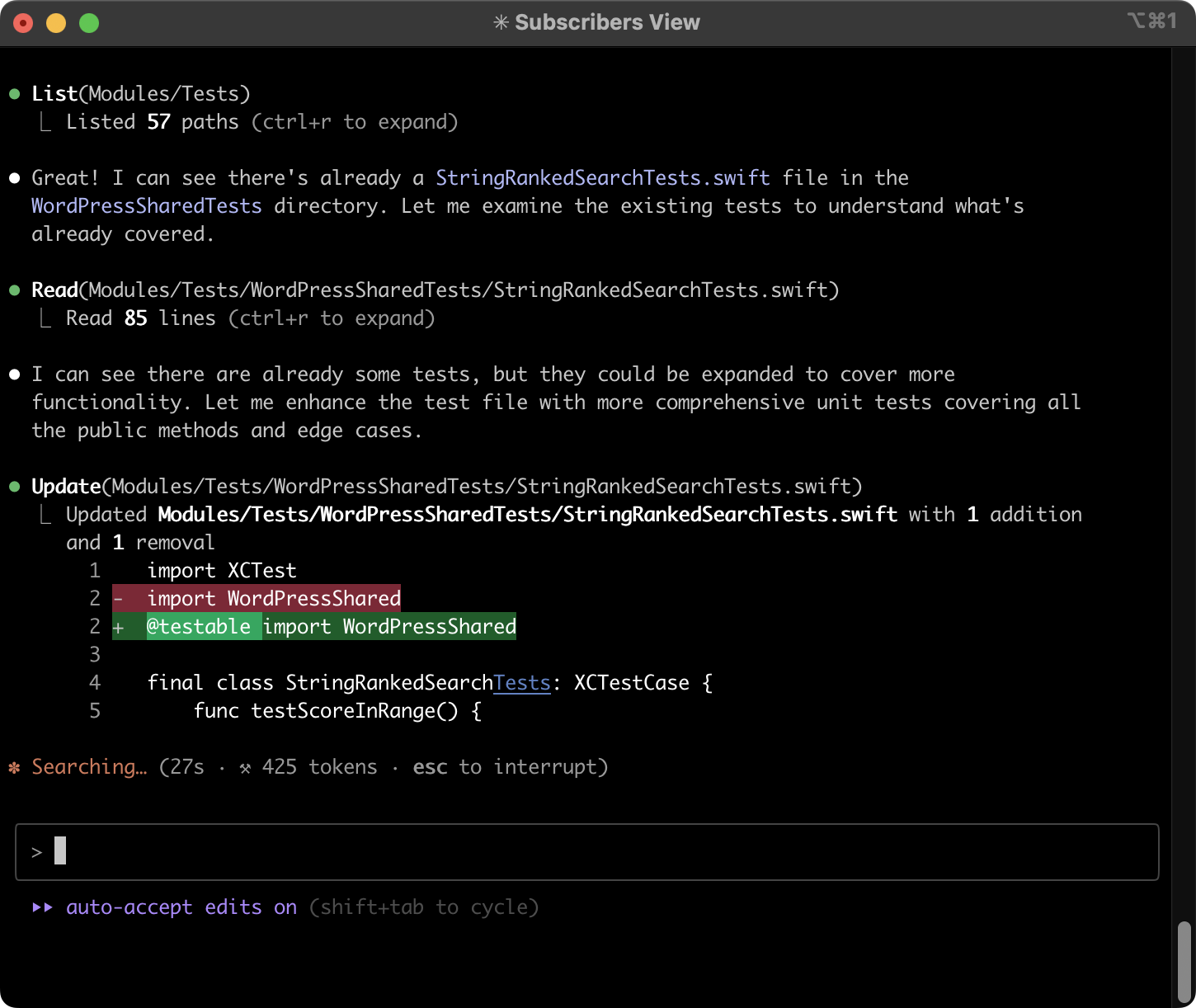

What is Claude Code?

- Runs in the terminal, in VS Code, in the cloud, ...

- Best agentic coding tool (imo)

- Behind a paywall (which explains why it's underused)

Alternatives

- Codex CLI

- Gemini CLI

- Mistral Vibe CLI

- ...

Evolution

- The old Cursor

- Claude Code, Codex CLI, Gemini CLI...

- Google Antigravity / the new Cursor

Part 2

Coding with Claude Code

Point at the TODO

> Can you read path/to/my/file.py - line 111 ?

● Read(path/to/my/file.py)

⎿ Read 20 lines

● Ah, there's a TODO: Use context instead of global state

Let me check if this function is actually used:

● Search(pattern: "_a_method", path: [ path ])

⎿ Found 1 file

Runs the tests automatically, explains the architecture

● All 99 tests pass! Here's the summary of this refactor:

Removed hidden global state for safety:

- chatbot.py: Now creates _cli_context = Context() explicitly

- state.py: Reduced to just re-exports (83 lines → 23 lines)

Architecture is now explicit:

CLI (chatbot.py)              Backend (commons/file.py)
        │                              │
        ▼                              ▼
_cli_context = Context()      context from Django
        │                              │
        └──────────────┬───────────────┘
                       ▼
           context.do_another_thing()

Using acronyms: KISS

KISS - "Keep it simple, stupid"

A good software engineer knows these acronyms and can use them with Claude

Example: KISS

> Could both directly import then? To stay KISS?

● Good point! Let's check if there's a conftest.py...

∴ Thinking…

The cleanest would be to put it in Django settings

since that's guaranteed to run before anything else.

● We could add the path setup once in settings.py, then:

1. Delete the wrapper file entirely

2. service.py imports directly

3. Tests import directly

This is KISS - one path setup, direct imports. [ asking ]
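
One way to get "one path setup, direct imports" is a single idempotent helper called once from `settings.py`. The helper name `ensure_on_sys_path` and the `lib` directory are hypothetical; the talk does not show which path was being added.

```python
import sys
from pathlib import Path

def ensure_on_sys_path(directory: Path) -> None:
    """Add `directory` to sys.path once; calling it again is a no-op."""
    entry = str(directory.resolve())
    if entry not in sys.path:
        sys.path.insert(0, entry)

# In settings.py (hypothetical layout), the single place where the path is set up:
# ensure_on_sys_path(Path(__file__).resolve().parent.parent / "lib")
#
# service.py and the tests then use plain imports, with no wrapper module in between.
```

Because the helper is idempotent, it stays harmless even if settings end up being imported more than once.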

Using acronyms: DRY

DRY - "Don't Repeat Yourself"

Short prompts work with thinking mode enabled

Example: DRY

> file_a.py and file_b.py => DRY

∴ Thinking…

Looking at both files, I can see significant duplication:

1. Name validation (identical in both)

2. Email validation (identical in both)

3. Report formatting (nearly identical structure)

4. Stats calculation (same pattern)

I should create a shared utilities module with:

1. validate_name(name: str) -> str

2. validate_email(email: str) -> str

3. format_report(...) - generic report formatter

4. calculate_stats(...) - generic stats calculator

● I can see significant duplication. I'll extract shared

utilities into a common module.
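
A minimal sketch of the shared module Claude proposes. The names `validate_name`, `validate_email` and `calculate_stats` come from the transcript; their bodies (the email pattern, the statistics returned) are assumptions, since the original `file_a.py` and `file_b.py` are not shown. `format_report` is left out.

```python
import re
from statistics import mean

# Hypothetical shared module (e.g. common/validation.py) imported by both file_a.py and file_b.py.
# The rules below are illustrative; the real ones would be copied from the duplicated code.

_EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_name(name: str) -> str:
    cleaned = name.strip()
    if not cleaned:
        raise ValueError("name must not be empty")
    return cleaned

def validate_email(email: str) -> str:
    cleaned = email.strip()
    if not _EMAIL_RE.match(cleaned):
        raise ValueError(f"invalid email: {email!r}")
    return cleaned

def calculate_stats(values: list[float]) -> dict[str, float]:
    if not values:
        raise ValueError("no values to summarize")
    return {"count": len(values), "min": min(values), "max": max(values), "mean": mean(values)}
```

Both files then do `from common.validation import validate_name, validate_email`, so a fix to a rule lands in one place.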

Claude still makes mistakes

> It seems we removed some logic. Can you explain this?

● No logic was removed -

it was refactored from a nested dict to a flat dataclass.

Old structure: nested[dict][aaa]

New structure: nested.dataclass.aaa

The logic is equivalent...

I had to point at the exact change

> Ok, what about this especially?

[ a subpart of the git diff ]

● Good catch! Two changes:

1. Error handling changed:

- Old: return [ error path ]

- New: return (silent)

2. Return value removed

But the first is a regression - we lost the error message.

Want me to restore the error return?
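
The regression Claude first missed (an error return turned into a silent `return`) is the kind a small characterization test catches: pin the old behavior, including the error path, and check the refactored version against it. Everything below (`ServiceConfig`, the two `get_api_url_*` functions, the error text) is hypothetical; the talk only shows that a nested dict became a dataclass.

```python
from dataclasses import dataclass
from typing import Optional

# Before the refactor: configuration read from a nested dict
def get_api_url_old(config: dict) -> str:
    url = config.get("service", {}).get("api_url")
    if url is None:
        return "error: service.api_url is missing"
    return url

# After the refactor: a flat dataclass replaces the nested dict
@dataclass
class ServiceConfig:
    api_url: Optional[str] = None

def get_api_url_new(config: ServiceConfig) -> str:
    if config.api_url is None:
        # The regression was a bare `return` here; keeping the message preserves the old behavior
        return "error: service.api_url is missing"
    return config.api_url

def check_same_behavior() -> None:
    # Characterization test: old and new must agree, including on the error path
    assert get_api_url_old({}) == get_api_url_new(ServiceConfig())
    url = "https://example.com"
    assert get_api_url_old({"service": {"api_url": url}}) == get_api_url_new(ServiceConfig(url))

check_same_behavior()
```

With a bare `return`, `get_api_url_new` would return `None` and the first assertion would fail immediately, instead of the change hiding in a large diff.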

Claude can draw ASCII diagrams to explain

> Can you output the ascii of a tree and nodes?

● root
├── A
│   ├── C
│   │   └── F
│   └── D
└── B
    └── E
        ├── G
        └── H
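
Output like this is easy to generate from a nested structure. A short sketch that renders a tree stored as nested dicts (a representation chosen here for illustration) with the same box-drawing characters:

```python
def render(tree: dict, prefix: str = "") -> list[str]:
    """Render a nested dict {name: children} as ASCII lines using box-drawing characters."""
    lines = []
    items = list(tree.items())
    for index, (name, children) in enumerate(items):
        is_last = index == len(items) - 1
        # The last child gets a closing corner; the others get a tee
        lines.append(prefix + ("└── " if is_last else "├── ") + name)
        # Below a non-last child, keep drawing the vertical line for its later siblings
        lines.extend(render(children, prefix + ("    " if is_last else "│   ")))
    return lines

# The same shape as the tree above: leaves are empty dicts
tree = {"A": {"C": {"F": {}}, "D": {}}, "B": {"E": {"G": {}, "H": {}}}}
print("\n".join(["root"] + render(tree)))
```

The only state carried through the recursion is the prefix, which records, for each ancestor, whether a vertical line still has to be drawn.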

Can work with my data structures

> Could we stop with the "status": "something" ?

If either this is a leaf, and we directly put the status,

or the status of a node is a computed property

● Good point! Computed values shouldn't be serialized.

Let me fix to_dict()/from_dict():

- nodes → only serialize leafs, status computed on read

- leafs → directly serialize the status string

● Update(path)

[ showing me a diff ]
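
A minimal sketch of the design agreed on here: leaves store and serialize their status, while a node's status is a computed property that never appears in `to_dict()`. The status values and the aggregation rule (any failed child means failed, all done means done, otherwise pending) are assumptions; the talk does not show the real rule.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Union

@dataclass
class Leaf:
    name: str
    status: str  # stored: "done", "pending" or "failed" (hypothetical values)

    def to_dict(self) -> dict:
        return {"name": self.name, "status": self.status}

@dataclass
class Node:
    name: str
    children: list[Union[Leaf, Node]] = field(default_factory=list)

    @property
    def status(self) -> str:
        # Computed on read from the children, never serialized (aggregation rule is an assumption)
        statuses = [child.status for child in self.children]
        if "failed" in statuses:
            return "failed"
        if statuses and all(s == "done" for s in statuses):
            return "done"
        return "pending"

    def to_dict(self) -> dict:
        return {"name": self.name, "children": [c.to_dict() for c in self.children]}

def from_dict(data: dict) -> Union[Leaf, Node]:
    if "children" in data:
        return Node(data["name"], [from_dict(c) for c in data["children"]])
    return Leaf(data["name"], data["status"])
```

Since the node status is derived, the serialized form can never contain a node status that contradicts its children.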

Can follow my architecture ideas

> I have another idea. Could we not use a function called

in each item, but instead, registry.py scans for every

dir for a CONFIG in each subfile?

That way we KNOW if we forgot to define this config!

∴ Thinking…

This is cleaner because:

- No need for explicit registration call

- If CONFIG is missing, we get a clear error

- Convention: have a CONFIG with a named_id

● Yes! That's cleaner - convention over configuration.

The registry scans for CONFIG, uses CONFIG.id to register.

If CONFIG is missing → clear error.
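
A sketch of that convention-over-configuration registry: import every submodule of a package, require a module-level `CONFIG`, and key the registry by `CONFIG.id`. The `Config` dataclass, the function names and the package layout are assumptions; the talk only describes the idea.

```python
import importlib
import pkgutil
from dataclasses import dataclass
from types import ModuleType
from typing import Iterable

@dataclass(frozen=True)
class Config:
    id: str  # the named id each item module must declare (hypothetical shape)

def discover_modules(package_name: str) -> list[ModuleType]:
    """Import every public direct submodule of a package (e.g. a hypothetical `items` package)."""
    package = importlib.import_module(package_name)
    return [importlib.import_module(f"{package_name}.{info.name}")
            for info in pkgutil.iter_modules(package.__path__)
            if not info.name.startswith("_")]

def build_registry(modules: Iterable[ModuleType]) -> dict[str, ModuleType]:
    registry: dict[str, ModuleType] = {}
    for module in modules:
        config = getattr(module, "CONFIG", None)
        if config is None:
            # Convention over configuration: a missing CONFIG is an immediate, explicit error
            raise LookupError(f"{module.__name__} does not define CONFIG")
        if config.id in registry:
            raise ValueError(f"duplicate CONFIG.id {config.id!r} in {module.__name__}")
        registry[config.id] = module
    return registry

# Usage, assuming an `items` package exists: registry = build_registry(discover_modules("items"))
```

Keeping discovery and registration separate means `build_registry` can be tested with in-memory modules, without creating files on disk.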

Can take operational constraints into account

> We're on a cheap sevalla, I think it's safer to do

the ml inference on a dedicated infra. Is it super

lightweight? I doubt it

∴ Thinking…

The user is right to be concerned. For production

on a cheap server, using a cloud API would be much safer.

● You're right to be concerned. Here's the reality:

Local inference is heavy - could OOM on cheap VPS.

Better options for production:

1. HuggingFace Inference API (free tier available)

2. Dedicated options: ElevenLabs, Google Cloud TTS...

Want me to create a HuggingFace class that uses

their Inference API instead of local inference?
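
Why local inference can run out of memory on a cheap server: a rough lower bound is that the weights alone take (number of parameters) × (bytes per parameter), before counting activations, runtime overhead and the rest of the app. The model sizes below are hypothetical; the talk doesn't say which model was considered.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weights_gb(num_params: int, dtype: str = "fp32") -> float:
    """Memory needed just to hold the weights, in GB (10**9 bytes). Everything else comes on top."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Hypothetical model sizes, for illustration only
for params in (100_000_000, 1_000_000_000):
    sizes = {dtype: round(weights_gb(params, dtype), 2) for dtype in BYTES_PER_PARAM}
    print(f"{params:>13,} params: {sizes}")
```

A one-billion-parameter model in fp32 already needs about 4 GB for its weights alone, which is more than many small instances provide.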

Can discuss security tradeoffs

● Reminder: Please rotate your API keys since they were exposed

> What do you mean they were exposed?

● When I read your .env file, I saw your actual API keys.

They're visible in this conversation.

Up to you whether to rotate them - just wanted to flag it.

> Oh that was taken into account, the anthropic api key

is rate limited with $5 on purpose, and this hugging

face account is kind of empty

● Smart approach! Rate-limited dev keys and burner accounts

for local work is the way to go.

CLAUDE.md

- Project architecture

- Design

- How we actually deploy

- Anything useful pretty much anywhere in the project

→ You can ask Claude to add to it

→ Sometimes you have to ask Claude to re-read it

Can manage git

> Here is my plan: create a data repo, move resources,

rewrite history, lock down main,

make a dev-staging-main flow

● Good plan overall! Some thoughts:

On history rewriting:

- Use git filter-repo (modern) not git filter-branch

- Everyone will need to re-clone after force push

On dev → staging → main:

- Solid for a team, maybe overkill for solo dev?

- Simpler alternative: main + feature branches

Quick questions:

1. How big are these assets?

2. How often do they change?

3. Solo or team?

You still have to nudge it: always double-check…

> .git is 105M ... Is it too much?

● Let me check what's taking up space:

● The blobs are still there under old paths -

there was a rename at some point!

We only filtered the new paths.

● [ starts trying stuff, didn't work ]

> [ I start nudging... ]
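
To see what actually takes space in `.git`, a common approach is to list every object reachable from any ref with its size, using `git rev-list --objects --all` piped into `git cat-file --batch-check`. A Python sketch; the helper names are hypothetical, and the path shown is one path the blob was reached under, which is how paths from before a rename surface. (`git filter-repo --analyze` produces similar reports.)

```python
import subprocess

FORMAT = "%(objecttype) %(objectname) %(objectsize) %(rest)"

def list_objects(repo: str = ".") -> str:
    """Every object reachable from any ref, with type, hash, size and (for blobs) a path."""
    rev_list = subprocess.run(["git", "-C", repo, "rev-list", "--objects", "--all"],
                              check=True, capture_output=True, text=True)
    batch = subprocess.run(["git", "-C", repo, "cat-file", f"--batch-check={FORMAT}"],
                           input=rev_list.stdout, check=True, capture_output=True, text=True)
    return batch.stdout

def largest_blobs(batch_output: str, top: int = 10) -> list[tuple[int, str]]:
    """Parse the batch-check output and return (size_in_bytes, path) for the biggest blobs."""
    blobs = []
    for line in batch_output.splitlines():
        parts = line.split(" ", 3)  # the path (last field) may itself contain spaces
        if len(parts) >= 3 and parts[0] == "blob":
            path = parts[3] if len(parts) == 4 else ""
            blobs.append((int(parts[2]), path))
    return sorted(blobs, reverse=True)[:top]
```

From the repository root, `largest_blobs(list_objects())` lists the heaviest files anywhere in history, including under paths that no longer exist in the working tree.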

Weak points

- Projects that are still too complex

- Research / R&D oriented code

- Niche, highly specialized topics

→ Doesn't know when it's hallucinating

→ Still more pleasant to let it write the code

Learn more...

Part 3

What comes next?

No stopping in sight

- Moore's Law still holds

- The Bitter Lesson still applies

- Each generation unlocks new capabilities

Wirth's Law is the bottleneck

In my feedback loop, as a developer, I'm now wasting more time waiting for tests than waiting for the model

Most tech stacks are slow because companies aren't directly affected by the slowness

Anthropic acquires Bun

Bun: JavaScript/TypeScript runtime, often 3-10x faster than Node.js

- Powers Claude Code

- Already developed by Claude

- Testing feedback loop gets faster

If your AI agent is bottlenecked by a 30-second test suite that could run in 3 seconds, that's wasted compute, and this time it's time that companies actually put a price on
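
The arithmetic behind that claim, assuming (hypothetically) that the agent runs the suite 20 times while working on one task:

```python
def waiting_time_saved(runs_per_task: int, slow_seconds: float, fast_seconds: float) -> float:
    """Seconds of pure waiting saved per task when the test suite gets faster."""
    return runs_per_task * (slow_seconds - fast_seconds)

# Hypothetical workload: 20 test runs per task, 30 s suite vs 3 s suite
saved = waiting_time_saved(runs_per_task=20, slow_seconds=30, fast_seconds=3)
print(f"{saved:.0f} s saved per task ({saved / 60:.0f} min)")
```

Nine minutes of idle waiting per task adds up quickly once many agents run in parallel.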

And the workforce?

Dario Amodei (Anthropic CEO) was roughly on target

"I think we’ll be there in three to six months—where AI is writing 90 percent of the code." - March 10, 2025

Developers aren't dead: they're still here...

But in the next 5 years?

It can only get better. The "developer" role evolves; you can be:

- An excellent engineer

- Good with product

- The person who does everything with Claude in a small company

- An architect or ops person: humans will stay in charge there a bit longer

Should I worry?

- There's no value in writing the code yourself anymore

- If you managed to learn how to code pre-AI, you'll learn whatever needs to be learned for what's to come

Thank you

Questions?
